Application security as a whole requires an overarching view of all your products and how their vulnerabilities might be made worse or better in relation to each other. However, an individual assessment will be limited to its defined scope. So I’m going to talk about what you should probably know before going into API Security Assessments as an observer and not a tester. Some of this is generally applicable to application security as a whole, but my focus will be API security.

We will be looking at each of the following questions in this post. So be forewarned that it will be a little long. But you should be able to refer to individual sections depending on what interests you the most.

- What are APIs?

- What role do APIs play in Application Security?

- What happens in Application Security?

- What are the consequences?

- What could cause a compromise?

- Why is this cause so frequent?

- How can you make others care about application security?

- How should you interpret an API security assessment?

- Conclusion

Without further ado, let’s dig in.

What are APIs?

APIs, or Application Programming Interfaces, allow communication between different applications. They provide a protocol or a contract that tells consumer applications how to do two things.

- Present readable data – so that the API knows what to process, in which order, and using what function

- Read the data presented back – so that the consumer service can make use of the API’s service

There are several types of APIs. Some of the common ones used are REST APIs with JSON syntax, SOAP APIs with XML syntax, and GraphQL where the response format is defined by the client in the request itself. You will see that GraphQL syntax is somewhat of a derivative of JSON structures, but not exactly JSON when it comes to forming the request.
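To make the difference in request shape a little more concrete, here is a minimal sketch in Python. The host `api.example.com`, the `/users/42` path and the `user`, `name` and `email` fields are all made up for illustration. Only the general shape of each request matters.

```python
import json

# Hypothetical REST request: the endpoint decides which fields come back.
rest_request = {
    "method": "GET",
    "url": "https://api.example.com/users/42",  # assumed URL, for illustration only
    "headers": {"Accept": "application/json"},
}

# Hypothetical GraphQL query: the client lists exactly the fields it wants.
# Note that this looks JSON-like, but it is not valid JSON.
graphql_query = """
query {
  user(id: 42) {
    name
    email
  }
}
"""

# Over HTTP, the GraphQL query is commonly wrapped in a JSON envelope.
graphql_request = {
    "method": "POST",
    "url": "https://api.example.com/graphql",  # assumed URL, for illustration only
    "headers": {"Content-Type": "application/json"},
    "body": json.dumps({"query": graphql_query}),
}
```

From an assessment point of view, the thing to notice is that in the GraphQL case the client controls the response shape, so field-level access control has to be enforced on the server.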

Why do we need APIs?

Cross-dependence of businesses

APIs are a necessity because it’s very rare that business cases nowadays are independent and self-sufficient. For example, if you need to implement an online shopping store, you need a way to talk to a payment processing entity.

Functional requirements

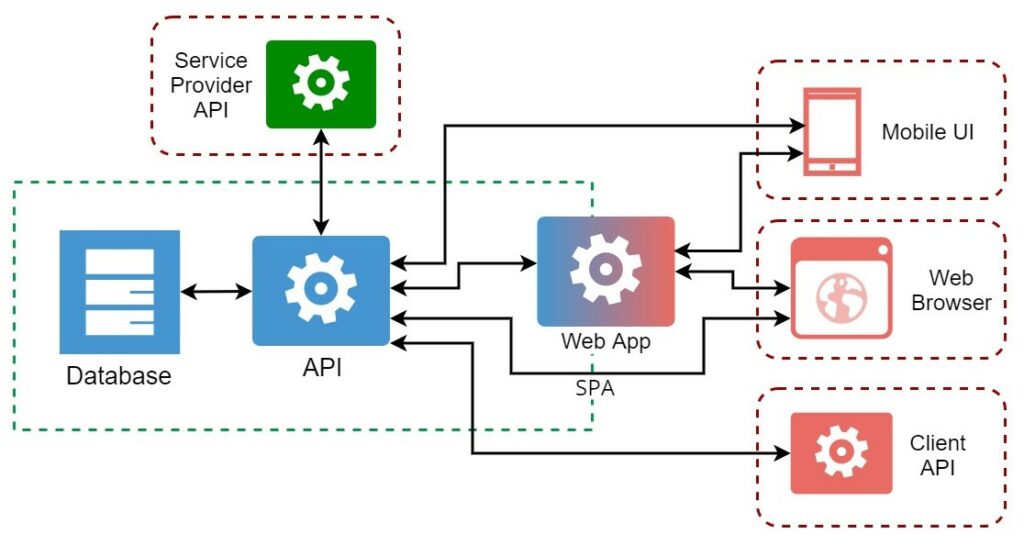

If you refer to the above diagram you see that both mobile and web applications talk to the API, either directly or through the Web Application. Additionally, mobile applications might have different implementations for different platforms (iOS, Android). When multiple applications talk to a database individually, there could be data conflicts and race conditions. There has to be a common gateway where these requests can be sequenced. That’s where APIs can come in.
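As a rough illustration of what sequencing requests at a common gateway means, here is a small Python sketch. The `InventoryApi` class and the `simulate_clients` helper are hypothetical names of mine, and a lock in a single process stands in for whatever a real API would use (database transactions, queues and so on).

```python
import threading


class InventoryApi:
    """Hypothetical single entry point in front of a shared stock count."""

    def __init__(self, stock):
        self._stock = stock
        self._lock = threading.Lock()

    def purchase(self, quantity):
        # The check and the update happen under one lock, so two clients
        # can never both pass the check for the last remaining item.
        with self._lock:
            if self._stock >= quantity:
                self._stock -= quantity
                return True
            return False

    @property
    def stock(self):
        with self._lock:
            return self._stock


def simulate_clients(api, clients):
    """Let many clients (web, iOS, Android...) buy one item each, concurrently."""
    threads = [threading.Thread(target=api.purchase, args=(1,)) for _ in range(clients)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return api.stock
```

If each client wrote to the data store on its own, the check and the update could interleave and the stock could go negative. Routing everything through one component makes that class of problem much easier to reason about.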

Similarly, Single Page Application (SPA) development is also facilitated by APIs, where asynchronous requests manipulate the user interface rather than dynamic pages being generated on user clicks and submissions.

Maintainability

The popularity of APIs is also attributed to the benefits of microservices. They help developers to implement modular, independent, and reusable functions, which can be assembled into a large or complex application that is maintainable.

What role do APIs play in Application Security?

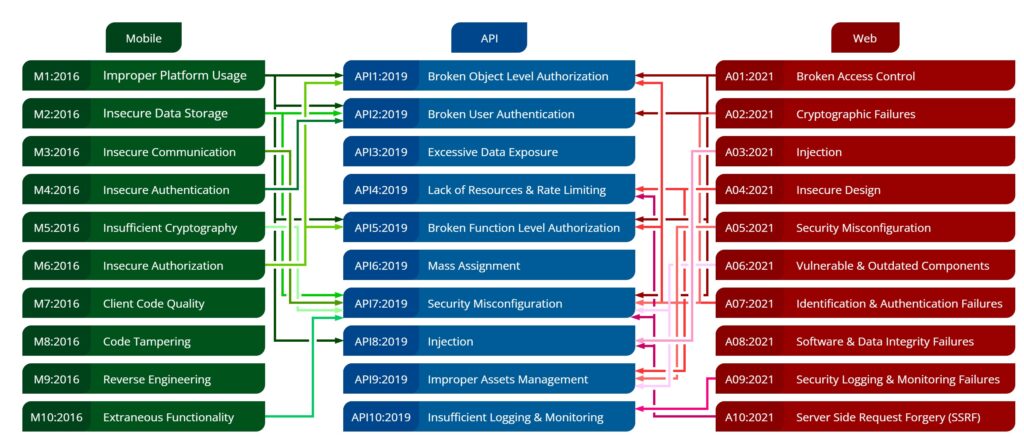

If there was an obvious takeaway in the preceding discussion, it is that APIs are not isolated entities but rather one component of application security. In the above diagram, we have an overview of how the OWASP Top 10 security risks for mobile, API, and web applications are interconnected. This representation is not a one-to-one mapping, but rather shows whether a category from the mobile or web Top 10 risks intersects with a category in the API Top 10 risks.

This clearly shows why we can’t talk about APIs alone when considering the state of security, but rather application security as a whole.

The good news is that whatever controls you apply at the API level will mitigate a subset of security issues from Mobile and Web apps that consume the API. This doesn’t mean that everything can be controlled at the API level. You can see that some categories have no links to each other at all. But the security controls you apply at the API level will propagate to both mobile and web security when they consume the API, which might save you a lot of man-hours in bug fixing.
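Here is a minimal Python sketch of one such control, an object-level authorization check enforced at the API. The `Order` type, the `ORDERS` store and the `get_order` function are hypothetical and only there for illustration. A real service would look the caller up from an authenticated session or token.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: int
    owner_id: int
    total: float


# Hypothetical in-memory store standing in for a database.
ORDERS = {
    1: Order(order_id=1, owner_id=100, total=25.0),
    2: Order(order_id=2, owner_id=200, total=40.0),
}


class Forbidden(Exception):
    """Raised when the caller may not see the requested object."""


def get_order(requesting_user_id, order_id):
    order = ORDERS.get(order_id)
    # Same response for "does not exist" and "not yours", so the API
    # does not reveal which order IDs are valid.
    if order is None or order.owner_id != requesting_user_id:
        raise Forbidden("order not available")
    return order
```

Because the check lives in the API, it applies no matter whether the request came from the web app, the iOS app or the Android app, and none of those clients has to reimplement it.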

As an extension of this, it’s worth noting that low-risk vulnerabilities in one domain can be combined with vulnerabilities from other domains to produce higher-risk vulnerabilities.

What happens in Application Security?

This is a loaded topic, and people better than me have done it better. But for the sake of argument, I would like to give a snapshot of something that happened recently which encapsulated the unpredictability of this domain very well.

In mid-November 2021, a user registration procedure of the FBI’s Law Enforcement Enterprise Portal (LEEP) was compromised to send fake “cyber attack” emails to thousands of email addresses [1]. This was a false alarm in this particular case. But there was potential for credential harvesting via phishing, and of course the reputation damage.

What’s interesting here is that this attack was carried out to damage the reputation of a security researcher who had exposed a hacking group, which, as you can see, does not have much to do with the FBI.

The takeaway here is that attackers are not at all predictable, and even if your organization does not handle user data or financial data and has excellent public relations, if a vulnerability is exploitable someone somewhere is going to find a reason to exploit it. Therefore you should be prepared for a very diverse set of threats.

What are the consequences?

This again is a broad topic. But considering the diversity of attacks highlighted in the earlier incident, let us look into some instances where actual financial losses had occurred. And it might not always be how you’d think.

2018 saw the implementation of the General Data Protection Regulation (GDPR) by the European Union. GDPR aims to increase individual control of personal data, both inside and outside the EU and the European Economic Area.

According to MicroFocus’s 2019 Application Security Risk Report [2], of the compromises that occurred in 2018 alone, 2 of 4 incidents (50%) were due to insufficient application security. Two more incidents had not been fully processed at the time the report was published.

All four incidents are summarized below.

| Compromise | Fine | Cause |
|---|---|---|
| Centro Hospitalar Barreiro Montijo, Portugal | €400,000 | Unauthorized access to patient data by staff |
| Knuddels, Germany (a chat application) | €20,000 (a €10 million fine was avoided by early detection and reporting) | Data leakage (username/password), plain-text passwords |
| British Airways | £20 million | Client financial data leaked through XSS in the payment page [3] |
| Facebook “View as” feature | Fine circumvented due to certain technicalities | Unauthorized access due to an access-token-related implementation flaw [4] |

As you can see, the consequences that come in terms of an actual fine are not light. It should also be noted that implementing logging, monitoring, and alerting solutions could help you minimize the potential damage and how much you stand to lose.
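Since plain-text passwords show up as a cause in the table above, here is a minimal sketch of the alternative using only Python’s standard library. The function names and the iteration count are my own assumptions, not something taken from the reports. Treat the work factor as a placeholder to be tuned, and consider a dedicated password hashing library in production.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor; tune for your own hardware


def hash_password(password):
    """Return (salt, digest). Store both, never the password itself."""
    salt = os.urandom(16)  # a fresh random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Recompute the digest and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected_digest)
```

With something like this in place, a leaked user table exposes salted digests rather than credentials that can be reused right away.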

If you’re someone from Sri Lanka like me, you might be pretty unconcerned about GDPR. But not so fast! We will also be getting data protection legislation in the near future.

So you can see that sooner or later, implementing proper application security controls is going to be a necessity for everyone.

What could cause a compromise?

Bugs. But not just that.

Let me elaborate. First of all, let’s look at some data for 2021 provided by HackerOne [6] and Bugcrowd [7], two bug bounty platforms, and data for 2018 provided by MicroFocus [2], which draws on vulnerability data from its Fortify on Demand service (a SaaS testing solution by MicroFocus).

- Hacking APIs increased by *694%* compared to the previous year

- What hackers work on the most

  - Websites (96%)

  - APIs (50%)

  - Android applications (29%)

  - iOS applications (18%)

- Causes

  - Misconfiguration reports up by 310%

  - Improper Access Control and Privilege Escalation reports up by 53%

- API vulnerability submissions doubled

- Higher investment in bug bounty hunting

  - 50% increase in submissions

- Code quality and API abuse issues have roughly doubled over the past 4 years

- API Abuse Problems in 2018

  - 52% Mobile Apps

  - 35% Web Apps

- Causes

  - Problematic input validation

  - Security Feature bugs in 94% of Web Apps

If we look at all this data together, HackerOne says hacking APIs has increased by 694% compared to just the previous year. Bugcrowd also notes that API vulnerability submissions have doubled. Considering that the earlier report by MicroFocus says API abuse issues had roughly doubled over the 4 years leading up to 2018, this more recent data is not surprising at all.

Similarly, 50% of bug bounty participants focus on hacking APIs, second only to websites at 96%, while 29% and 18% work on Android and iOS applications respectively. But if you look at MicroFocus’s data again, 35% of web apps and 52% of mobile apps in 2018 had API abuse problems.

Overall, the investment in bug bounty hunting has increased. With the pandemic, hackers have more time to invest in bug bounty hunting, and with the subsequent remote working conditions, companies have more incentive to secure their products.

All of the aforementioned sources list certain common causes. But I want to highlight Bugcrowd’s observation that most of these bugs are due to repeated and avoidable errors. Let us look at what causes these bugs to be there, and to be there so regularly.

Why is this cause so frequent?

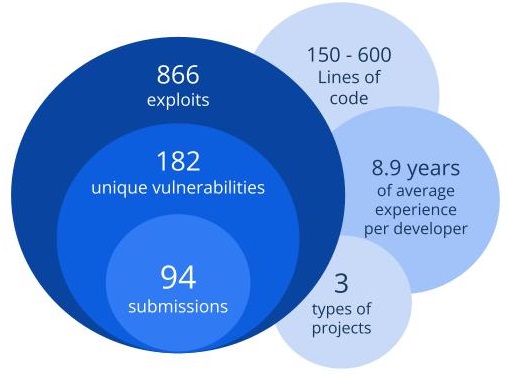

In 2020, a group of researchers from the University of Maryland conducted a study on understanding the security mistakes developers make. They gathered data from a secure programming contest, “Build It, Break It, Fix It” [8], and conducted a qualitative analysis.

3 types of projects were given to small groups of participants to develop, each of which had different requirements and both implicit and explicit security concerns.

Once the projects were completed, the source code was made available to other groups to find vulnerabilities. Vulnerabilities found were fixed in the final phase.

In terms of vulnerabilities found, the authors found that vulnerabilities due to a lack of security knowledge occur 4 times more often than common implementation mistakes. They fall into two subcategories: misunderstood security concepts, where the developers were aware of what to do but made errors in technology selection, configuration, or variable initialization, and lack of implementation, where developers did not realize a security requirement existed and therefore never implemented it. While these types of mistakes are common, the authors note that these bugs are rarely exploited. In contrast, coding mistakes were noted to be easy to find, exploit, and fix.

Misunderstood Security Concepts

Hard to find, rarely exploited

- Bad Choices (12%)

  - Weak crypto algorithms

  - Weak hash algorithms

  - Homemade Crypto

  - Weak access control design

- Conceptual Errors (27%)

  - Insufficient randomness

  - Fixed values

  - Disabled protections

No Implementation

Easy to find, rarely exploited

- Missed implicit security requirements

- Missing access/integrity controls

- No replay check

Coding Mistakes

Easy to find, easy to exploit, easy to fix

- Insufficient error checking

- Uncaught errors

- Control flow mistakes
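To give a feel for a couple of the items above, namely “Insufficient randomness” and “No replay check”, here is a minimal Python sketch of a signed request with a replay check. This is my own illustration and not code from the study. `SHARED_KEY`, `MAX_AGE_SECONDS`, `sign_request` and `accept_request` are hypothetical names, and the in-memory nonce set would need expiry and shared storage in a real deployment.

```python
import hashlib
import hmac
import secrets
import time

SHARED_KEY = secrets.token_bytes(32)  # hypothetical key shared by client and API
MAX_AGE_SECONDS = 300                 # assumed freshness window
_seen_nonces = set()                  # in-memory only; a real service needs expiry


def _signature(key, nonce, timestamp, body):
    message = b"|".join([nonce.encode(), timestamp.encode(), body])
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def sign_request(body, key=SHARED_KEY):
    # secrets (not random) gives cryptographically strong, non-fixed nonces.
    nonce = secrets.token_hex(16)
    timestamp = str(int(time.time()))
    return {
        "nonce": nonce,
        "timestamp": timestamp,
        "signature": _signature(key, nonce, timestamp, body),
    }


def accept_request(body, meta, key=SHARED_KEY, now=None):
    now = time.time() if now is None else now
    expected = _signature(key, meta["nonce"], meta["timestamp"], body)
    if not hmac.compare_digest(expected, meta["signature"]):
        return False  # integrity check failed: body or metadata was altered
    if abs(now - int(meta["timestamp"])) > MAX_AGE_SECONDS:
        return False  # too old (or too far in the future) to be trusted
    if meta["nonce"] in _seen_nonces:
        return False  # replay: this exact request was already accepted
    _seen_nonces.add(meta["nonce"])
    return True
```

Note how easy it is to ship the signature check alone and never notice that the replay check is missing, because every functional test still passes. That is the sort of implicit requirement the “No Implementation” category describes.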

The authors’ recommendations for attending to these issues are to improve secure programming with input from a security expert, aspire to a simpler design where security-related code is not duplicated, maintain better documentation, use automated vulnerability detection to avoid “mistakes”, and finally, provide security education for developers.

There are lots of nuanced details to consider when interpreting this data. For example, how well the findings transfer to a real-world situation, which the authors themselves discuss at length. I encourage you to check out the original paper if possible. However, I would like to point out a couple of things.

Given that vulnerabilities related to a lack of security knowledge are more common than mistakes, you would think that security education has to be prioritized. But their analysis shows that participant experience and expertise have very little impact on these issues. Additionally, they note that while experienced developers will choose more suitable technologies that may lead to fewer vulnerabilities, their experience does not have a direct impact.

This is an important distinction to note when answering our question, “why are bugs so frequent?”

In practice, when doing API vulnerability assessments, what we notice as the cause of security vulnerabilities is the difference in priorities between the people assessing the application and the people developing it. Software engineers and security testers have vastly different priorities. With agile methodology and minimum viable product delivery, developers will prioritize functionality. And with tight delivery schedules, they are prone to implementing security controls incorrectly because they lack a proper understanding, or to forgetting them entirely.

Given that this situation is unlikely to change, what would improve your security hygiene is not necessarily bombarding developers with additional training, but utilizing a “single responsibility” principle, where design is done with input from security experts and using tools for early detection of common “mistakes”. This scenario is properly summarized in the below quote.

This leads us to our next question, which is how you can get different stakeholders to care about investing time in integrating security into SDLC?

How can you make others care about application security?

To start with, there are uncertain benefits that everyone cares about, most of which we have already discussed.

Uncertain benefit later

- Early detection and early reporting could reduce fines significantly

- Minimized damage when/if another part of security infrastructure is compromised

- Attackers are versatile and adaptable

- Perimeter security is not a Swiss Army knife

  - Insider Threats

  - Man In The Middle

  - Social Engineering

- Reduced risk of reputation damage

- Continued trust of business partners

But one might be unwilling to invest more time and money in security just because of these, since you can’t know how much money was saved until something happens. And if you’ve invested properly, nothing will happen.

However, if we shift our point of view a little, you can also see certain tangible benefits.

Certain benefit now

- Faster feature release

- Reduced downtime

- Reduced customer complaints

- Man-hours saved

- Reduced cost

- Maintaining security standards certifications

These benefits are all interconnected. What often happens, however, is that they are not captured in any KPIs, at least not with a focus on security fixes. Let us look at some data.

The infographic you see above is from Veracode’s 2020 State of Software Security report [9]. The yellow and blue factors represent Nurture and Nature factors respectively. (The original report provides a more thorough explanation. Please note that “SAST through API” refers to building security testing into the development pipeline, not the API service implementations we’ve been discussing.)

If we look at the “Nature” factors (blue) which are factors that are out of developers’ control, it shows that factors such as larger applications and higher flaw density will increase the time it takes to close half of the observed bugs by as long as two months.

Code is written for reuse, and the more you reuse the more man-hours you invest in the initial implementation. So if you exclusively depend on post-discovery patching, it will make the application larger and increase the flaw density with time. Bugs will remain unresolved for longer, as the infographic shows. You can see what sort of impact this will have on costs where man-hours are concerned. So taking time to design securely might not be the enormous cost that everyone thinks it is.

Let’s get a little more specific and see which aspects of application security will appeal to different stakeholders.

Developers

- Simpler design will cause reduced technical debt and reduced security debt.

  - Single Responsibility Principle (SOLID) can be Security’s Minimal Trusted Code

  - Open–closed principle (OOP, SOLID) will have to be violated if you’re trying to integrate input validation after an assessment conducted on a UAT environment. It would be better to obtain input from a security expert during the design period itself.

- Reuse and evolution

  - Adaptability with backend technologies – Proper design can facilitate switching between technologies easily per evolving business needs without worrying about additional patching

    - No need to reimplement different injection blacklists because you changed the database technology and the query language is different now

  - Mistake-proofing junior developer code – If your connected components already have security controls, developers can learn from error responses and make fewer mistakes.

- For APIs specifically, you can kill many birds with one stone.

  - Security controls extend to both mobile and web applications.

  - Still, do not forget the other birds in the sky!

In our earlier discussion regarding why bugs are frequent, we saw that developers do grasp security concepts wrong and make mistakes, or they tend to miss certain implicit security requirements. But if this is integrated into the design, and if the user story itself has security concerns outlined, they will have to be implemented. This yet again will help junior developers to adapt faster and write secure code from the get-go.
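On the point about injection blacklists in the list above, a design-time alternative is to keep user input out of the query text altogether. Here is a minimal sketch using Python’s built-in `sqlite3` module. The `users` table and the `find_user` function are hypothetical, and other database drivers use their own placeholder syntax.

```python
import sqlite3


def find_user(conn, username):
    # The "?" placeholder keeps the input as data; it is never parsed as SQL.
    cursor = conn.execute(
        "SELECT id, username FROM users WHERE username = ?",
        (username,),
    )
    return cursor.fetchall()


# Hypothetical in-memory database, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])
conn.commit()
```

A classic payload such as `alice' OR '1'='1` is simply treated as an odd username that matches nothing. There is no list of forbidden characters to maintain, and nothing to rewrite when the query language changes.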

Customers / Product Owners / Project Managers

- Fixes might introduce new bugs.

  - This would cause the delivery deadlines to be extended further. (Refer to the earlier infographic by Veracode.)

- Post-implementation patching takes critical man-hours away from production bugs

  - When it comes to after-the-fact implementations, even the simplest bug fixes could be exhausting. You might need to refactor hundreds of places. And since security was not a design concern, this time will have to be allocated after production deployments, then and there as the bugs are found. This could be avoided if security controls were thought about during the design phase.

How should you interpret an API security assessment?

Convincing stakeholders to invest in security is well and good, but to recognize what risks are acceptable and what vulnerabilities should be mitigated immediately, you must know how to interpret the output of your security assessments. This is a somewhat interesting topic when it comes to APIs because considering their impact on any other applications that consume them, you should be aware of what the results you’re getting actually mean.

External Tester Perspective

For APIs, the tester will get full access to consume the API itself. The business flows of the different API endpoints will be known, and the documentation will be made available to the tester.

However, the tester will not have any idea about the internal business logic, nor any internal technologies or libraries that the application might be using. A caveat here is that sometimes such information can be accidentally exposed through errors, which is a low-risk vulnerability in itself.
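As a small illustration of how that accidental exposure can be avoided, here is a Python sketch that logs the full error internally and returns only a generic message with a correlation ID. The `to_error_response` function and the response shape are my own assumptions and not tied to any particular framework.

```python
import logging
import uuid

logger = logging.getLogger("api")


def to_error_response(exc):
    """Log the details server-side; hand the client a generic message only."""
    incident_id = uuid.uuid4().hex
    # The stack trace and exception message stay in the server logs.
    logger.error("unhandled error, incident %s", incident_id, exc_info=exc)
    return {
        "status": 500,
        "body": {"error": "Internal server error", "incident_id": incident_id},
    }
```

The client still gets something to quote in a support ticket, while library names, hostnames and query fragments in the exception never leave the server.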

It’s not just you

The tester will consider how your API could be attacked, but that’s not all. They will also consider how the API could be abused to attack a potential consumer.

Limitations

API Security Assessments are time-constrained, which leads to only subsets of APIs being tested at a time. The risk here is that the API flow might not be complete for a given scope, and therefore visibility of interconnected vulnerabilities might vary.

There could also be limitations in providing a proof of concept. Considering the limitations of the scope, the risk rating someone can assign will differ. APIs will not be tested in combination with mobile applications or web applications. Therefore some protection measures or attack vectors will be invisible to the tester. The API tester will not know how you use local storage in the mobile app, or what the mobile application code may expose. Any such risk combined with certain low-risk API vulnerabilities could collectively pose a higher threat. Conversely, there could be an additional encryption layer on top of HTTPS between the mobile app and the API, which could eliminate some API-specific vulnerabilities.

These proof of concept limitations are why an established risk management procedure is highly advised.

What should you keep in mind?

- Be prepared for the application to evolve.

- Track and manage accepted risks, low or otherwise

  - Risk could be low due to subset-based testing

  - Risk could be higher when considering mobile and web applications security testing outputs

- Threat actors could be implicit and internal

  - An external API you’re connecting to could be compromised

  - Disgruntled employee with access to internal systems

  - Innocent employees with compromised devices

- Depending on risk category, the tester will assume different attacker motivations

  - Stealing personal data

  - Denial of service

  - Attacking a consumer program through your API

- First and foremost, protect your component

Conclusion

We have gotten through all our questions, and I hope now anyone can place API vulnerabilities in the context they were found, and manage them accordingly with respect to the security practices of their organization. To summarize the themes of our discussion,

- Application Security is an investment.

  - Relying exclusively on perimeter security may not be enough

  - Allocating resources for implementing security will have tangible ROI

- Security controls are easier to implement at design time

  - Integrate threat modeling into the SDLC

  - Obtain input from security experts during the design

- Bugs are frequent – Early detection will save man-hours, leading to better ROI

  - Conduct continuous assessments, automate security testing, and integrate it into the CI/CD pipeline if possible

- Prefer simpler design

  - Keep reuse and evolution in mind – Minimize replication of security code

  - Controls are best applied transparently – Prefer established libraries over in-house implementations

  - Use post-implementation “patches” sparingly

- Not everything is code

  - Risk management is important

  - Security education will help but will not be enough – it will not hurt to address the conceptual gaps, but priorities of developers will always differ from those of security testers

As a parting note, here’s Gartner’s prediction for API security in 2022.

As a corollary to the above, note that there is a brand new category of 2021 OWASP Web Top 10 risks called Insecure Design that ranks in 4th place. OWASP mentions that these vulnerabilities by definition needed security controls that were never implemented. The fact this brand new category ranked 4th in the top 10 web risks should be quite telling.

Just aspire to protect individual components properly and your worries will be minimal.

References

- Lakshmanan, R., 2021. FBI’s Email System Hacked to Send Out Fake Cyber Security Alert to Thousands. [online] The Hacker News. Available at: <https://thehackernews.com/2021/11/fbis-email-system-hacked-to-send-out.html>

- MicroFocus. 2019. Application Security Risk Report - Micro Focus. [online] Available at: <https://www.microfocus.com/en-us/assets/images/cyberres/application-security-risk-report>

- BBC News. 2021. British Airways fined £20m over data breach. [online] Available at: <https://www.bbc.com/news/technology-54568784>

- GDPR.eu. 2021. The GDPR meets its first challenge: Facebook – GDPR.eu. [online] Available at: <https://gdpr.eu/the-gdpr-meets-its-first-challenge-facebook/>

- Information and Communication Technology Agency (ICTA) of Sri Lanka. 2021. Data Protection Legislation – Overview. [online] Available at: <https://www.icta.lk/data-protection-legislation-overview/>

- HackerOne. 2021. The 2021 Hacker Report. [online] Available at: <https://www.hackerone.com/resources/reporting/the-2021-hacker-report>

- Bugcrowd. 2021. Priority One Report. [online] Available at: <https://www.bugcrowd.com/resources/reports/priority-one-report/>

- Votipka, D., Fulton, K.R., Parker, J., Hou, M., Mazurek, M.L. and Hicks, M., 2020. Understanding security mistakes developers make: Qualitative analysis from build it, break it, fix it. In 29th USENIX Security Symposium (USENIX Security 20) (pp. 109-126) [online] Available at: <https://www.usenix.org/conference/usenixsecurity20/presentation/votipka-understanding>

- Veracode. 2020. State of Software Security v11 | Veracode. [online] Available at: <https://www.veracode.com/state-of-software-security-report>

- Gartner. 2021. API Security: Protect your APIs from Attacks and Data Breaches. [online] Available at: <https://www.gartner.com/en/webinars/4002323/api-security-protect-your-apis-from-attacks-and-data-breaches>

- Owasp.org. 2021. A04 Insecure Design – OWASP Top 10:2021. [online] Available at: <https://owasp.org/Top10/A04_2021-Insecure_Design/>